The Future of Cinema talk, 2025

DOUG GOODWIN: Thank you, everyone. Thank you to Swissnex, and thank you all for being here today. My name's Doug Goodwin, and I've had the unique opportunity to teach AI to students at three very different places, or really, three very different communities.

I taught a class in AI here at UCLA last term. As we just heard, I teach an AI class in experimental animation at CalArts, and I've also taught a similar class called "Machine Learning for Artists" at the Claremont Colleges, at Scripps College. Each community has been very different, and I've had to deal with the fact that AI changes every time you open it. If you've had the pleasure of trying to teach ComfyUI, or any of these other programs that sometimes don't last more than a month, you know it's a river you step into again and again, and it's never the same twice. I don't really recommend that unless you have a taste for constant change.

However, we're here now, and it is possible for artists to actually use these tools. And I think that matters. We've had several discussions today about whether we should get involved in this at all as artists. Should we fight back? Should we protest? Should we stay out of it, rather than contribute to the environmental problems brought on by spinning up millions of servers?

I think it is important. The door is open, and it's not going to close again easily, so it's important for us to know what it is and to try it out. In my case, that means teaching it to anyone who's willing to come and learn about it, but also making work with it, work that is critical in some way.

So I have a problem with AI when it tries to fake authenticity. Today's generative AI makes very convincing images, and it does this largely by persuasion. It makes images that adopt the forms and textures of film and photography, persuading the audience by borrowing the material history of recorded media. It tries to convince us that it's authentic by taking on the authority of photography. As an educator, which is my job, I get a lot of mileage out of this, but as an artist I do have a beef with it.

Maybe it's my theater training. I grew up with Brecht and Beckett, and I used every available means to show the reality of a subject and strip away the trappings of theater. I believed then, and still believe today, that one of the most interesting things you can see in a theater is someone sweeping the stage. I like to see the ladders. If you've ever studied Brechtian theater, you know what I'm talking about. I don't want a play, or a photograph, or a film, putting on airs that belong to something outside itself.

Let's do the best job with what we have. Let's not doll it up. Let's not make the lobby look nicer than it should be. Let's not draw attention away from the things we feel are ugly or weak. Let's learn something and lean into the failure of the medium, whatever it is, and let's not try to adopt the authority of another process.

AI can synthesize the grain structure of Tri-X film. It can take on the color cast of Polaroid film, VHS tracking errors, and the knobby texture of Kodak prints. This kind of appropriation steals the visual language of one medium to lend authenticity to a new one. Film grain says, "This was shot on film": chemical emulsions, silver halide crystals, all the physical processes that leave specific marks on the medium.

But AI simulates these through statistical prediction, learning what film grain looks like without any material process. So we have layers of concealment. AI hides its artificiality beneath its photorealism, beneath simulated analog artifacts, beneath the conventions of vernacular photography. Each layer says, "This is authentic, this has history." But underneath is a statistical synthesis faking the material traces of another medium.

Maybe this is a matter of taste. Call it bourgeois, if you like. Smooth AI serves the same function that academic painting served in Courbet's time. It's technically impressive, it hides its construction, it maintains good taste, and it makes images that appear naturally beautiful, effortless, and authentic: images that don't show their making.

So I suggest that we adopt brittleness as a critique: brittle AI. It can be a weapon against smooth, uncanny, synthetic images. Think about making a film on Super 8 today. You actually get a Super 8 camera, you buy some Super 8 film locally from Pro8, and you go shoot. Do you want to make a nostalgic movie? Because you can fall into that trap very easily. The film may look like it's from the 1970s, fail to differentiate itself, and simply lull the audience into a reverie. But you could also use it to challenge a Mad Men aesthetic. The medium's formal properties create distance, and they let you examine these tropes critically rather than reproduce them seamlessly.

Brittle AI can work the same way. Early, limited models show themselves: they can't fake film grain or Polaroid chemistry. They reveal their mechanisms, their synthesis, and this creates a kind of critical distance.

Courbet painted peasants at monumental scale. He showed bodies without idealization, more or less. The academy said it was too ugly to be serious art. Brittle AI can do the same work. It can show the ugly computational processes, it can refuse to borrow legitimacy from another medium, and it can reveal synthesis as labor.

But it's not just critique. These brittle images are beautiful. Their beauty comes from their apparent struggle to emerge—synthesis fighting to resolve itself, statistical prediction trying to cohere, like watching crystallization. We struggle to see the world clearly and to share what we see. The same struggle seems to be happening in digital media. This isn't metaphor—it's recognition. The computational process of trying to resolve an image from noise mirrors our perceptual and expressive struggle. We recognize ourselves in that honest difficulty of making something real.

This is what I call authentic synthesis: synthesis that doesn't clean up after itself, that shows the computational mess rather than hiding it under fake film grain. Synthesis that shows its labor, its uncertainty, its material reality as statistical process.

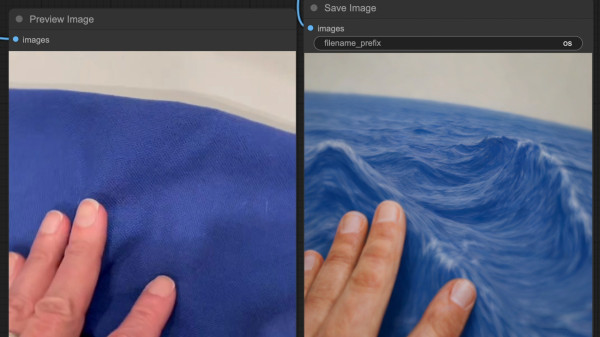

[Shows Digital Puppetry demonstration]

Let me show you. This is Digital Puppetry—real-time synthesis models I've rewritten to work with my own training data, performing live in front of a webcam. No simulated film grain. No fake VHS artifacts. No borrowed Polaroid chemistry. These images show what they are: real-time statistical prediction that reveals itself. Notice how the image struggles at the edges, how faces half-resolve. This isn't a bug—it's the model showing you its process, the statistical uncertainty it's working through in real-time.

I teach this at UCLA, CalArts, and Claremont. Students make their own models, train them on their own work, own their tools. These smaller models are more accessible than corporate AI. But more than that, students design the struggle. They set up generative engines to fail in intelligent ways.

One student scanned religious texts in several different languages, forcing the model to grapple with a wide range of letterforms and with the sacred context of religious documents. How does AI interpret untranslatable scripts? Another shot close-up footage of turf and grass, then used it to generate ocean waves, exploiting the model's categorical confusion between textures and fluids. These aren't random errors. They're designed breakdowns that reveal how the model thinks: what it can and can't distinguish, and where it struggles.

Students develop the ability to recognize: this is synthetic, here's how I can tell, here's what it's hiding. They understand how synthesis tries to fake material processes it doesn't have. This matters because we're drowning in synthetic images that fake authenticity. Seeing through their borrowed textures—that's critical literacy for contemporary visual culture.

I want to be clear: even working with our own models, we build on foundations of mass data scraping and tools trained without consent. This approach doesn't solve those ethical problems. But it refuses to hide inside them. It's critique from within complicity, not purity from outside.

High-end AI races toward perfect simulation—faking any medium's texture, trying to be indistinguishable from authentic photography. Brittle AI can't do this. In that inability—in visible synthesis, the struggle to emerge—we find both aesthetic and critique. This is authentic synthesis in practice. Not AI that fools us, but AI that helps us see what smooth images hide: underneath all those borrowed textures, there's no material process. Just statistical prediction faking a history it doesn't have.