Isabel MacGinnitie, 2024

DIY Prokudin-Gorskii

Project Proposal

Colorizing Black and White Film Photography: Comparing the Prokudin-Gorskii Method and Neural Networks

Summary

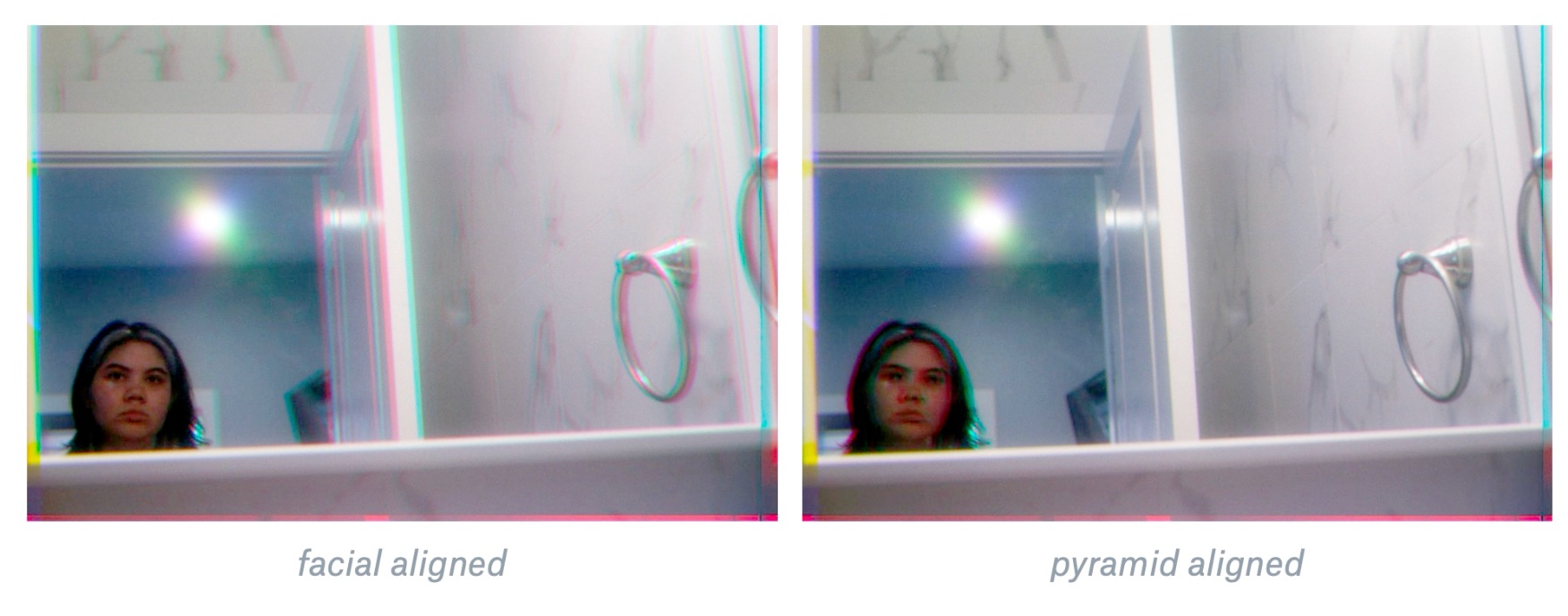

This project is an expansion of the Prokudin-Gorski lab, in two-fold. First, I am interested in recreating the three-color method with my own scenes using B&W film. Second, in collaboration with my SciComp class, I was interested in colorizing the same B&W scenes using AI. I would then compare how each method colorized the images in comparison to a color digital image of the scene.

The Neural Network

First, I downloaded Emil Wallner’s code at: https://blog.floydhub.com/colorizing-b-w-photos-with-neural-networks/ and read through his explanations and documentation.

I then adapted his simpler alpha and beta version (which were solely CNNs with no classifiers) to the Colab environment and my specifications, most notably having it load datasets and save results to my Google Drive.

I tested small batches of datasets with the beta version and ultimately chose to use:

a. Nvidia's StyleGAN dataset- 100,000 AI generated faces (though I only used 5,000 to train on) that were relatively diverse in race, ethnicity, age, and gender, at least in comparison to other face databases.

b. Unsplash- compiled by Wallner, about 10,000 artsy portraits/scenes

c. Stanford Background Dataset: around 800 images of outdoor scenes with something in the foreground

d. I experimented with the ImageNet dataset on the full version, but the images were so random that I ultimately chose not to use it. Their website was being updated at the time and it was impossible to choose specific subsets of the data, so I was only able to access their testing dataset. I compiled the following sets of images to test the colorizer on:

a. The scans of my unfiltered negatives

b. Selected Prokudin-Gorskii images: I chose 199 colorized images and their corresponding negatives to use mostly for testing, though I did experimentally use it to train some models. The Library of Congress does not have a great way to automatically download these images, so I manually opened each image I wanted and put its links in a spreadsheet to download and process in Colab.

c. ~500 Unsplash images

d. 1,000 Nvidia faces

e. Miscellaneous scanned black and white film that was similar to my other negatives and the Prokudin-Gorskii images

I prepared the sets of images into 256x256 images stored in moderately well organized Drive folders.

a. I automated this process with the “resize images.ipynb” notebook

b. For the Prokudin-Gorskii color and black and white images, I downloaded and resized the images straight from where the LOC hosted them to a Drive folder. This is done in “save and resize pg images.ipynb”

I adapted the full version (with classifier) to Google Colab. This was very painful and created a lot of issues, as Wallner’s code does not work on current versions of TensorFlow and Keras and breaks in very obscure ways. I spent many hours on StackOverflow and GitHub issue pages trying to find a solution, but was only able to discern the code relies on some depreciated versions of TensorFlow and Keras. Unfortunately, the way his code is written is not easily adapted to the significantly updated TF 2, so I was in a tough spot. While stressfully googling code snippets, I serendipitously stumbled upon the Kaggle project Image Coloring Using AutoEncoders, which was based off the same code and specifically required TensorFlow 1.14.0 and Keras 2.2.4. I also added a way to load previous models to further train and test as Colab can only take about 4,000 256x256 images before the RAM crashes.

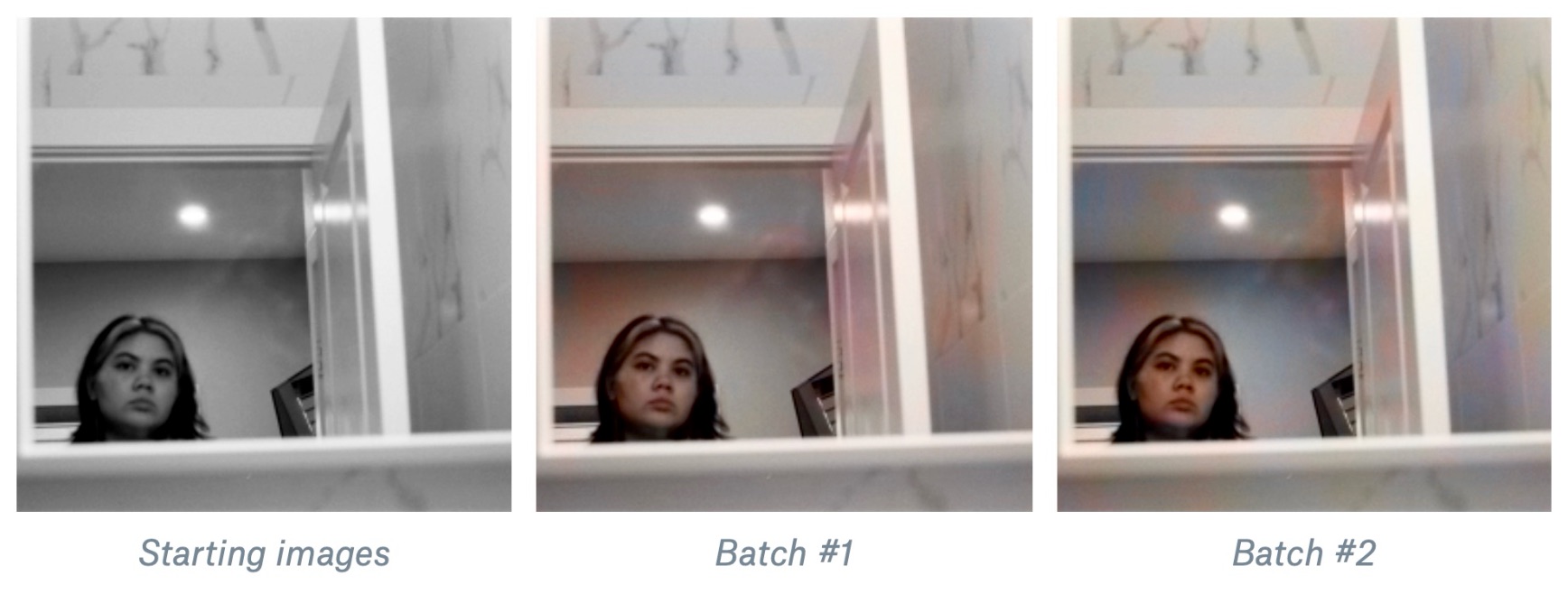

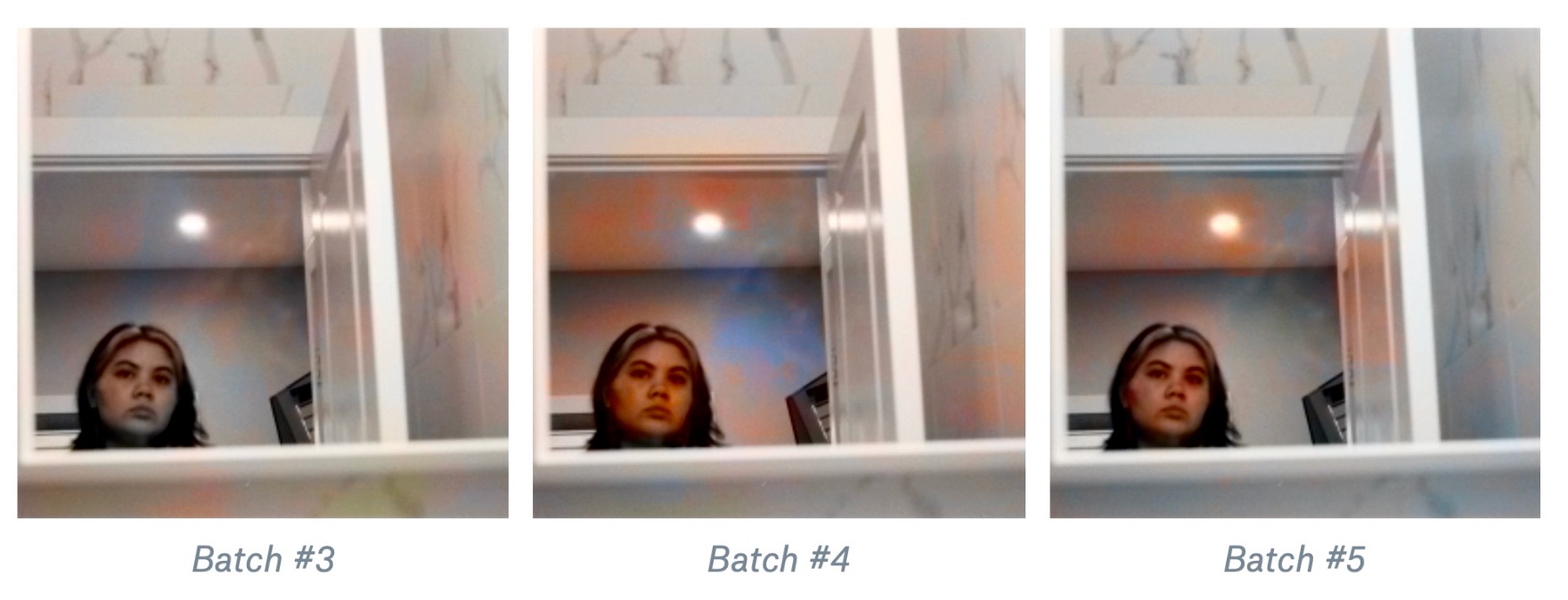

At this point, I began training my full model by running training sets in batches of 2000, which took around 2.5 hours per batch, with a further 0.5-1 hours to colorize the test set. However, I crucially did not randomize the order of my data.

After about a day and a half of training, I realized my model was significantly biased to the last dataset it trained on. At this point, I made the painful decision to rerun the model from scratch with randomly ordered data. I wrote some code that allows you to run the batches in random but non-repeating order, and spent another long day training the model. This time I divided the 15,007 training images into 5 batches of 3,000 (with the batch #5 being 3,007). For each set of 3000, I trained the model for 50 epochs, where each epoch runs on 30 images for 100 steps.

Here are is how each batch improved the coloring: