FP: Marcos Acosta, 2023

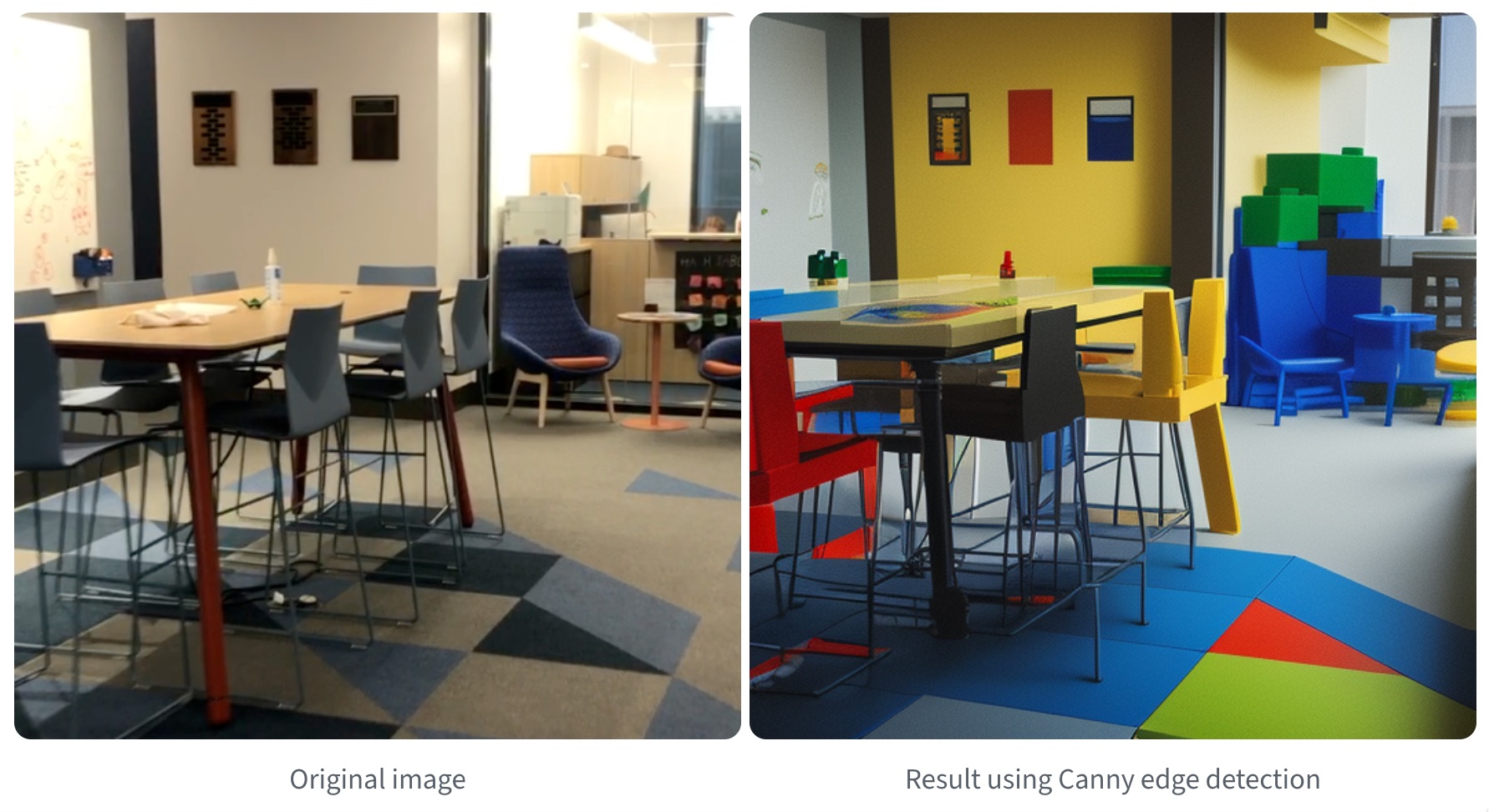

PROMPT: Make work that uses a ControlNet with Stable Diffusion to provide structure to realistic images and vice-versa (i.e. a photograph that provides structure to synthetic or cartoon images).

Abstract

We usually think of models like pix2pix, StyleGAN, and Stable Diffusion as image (or sometimes video) models. However, I’m most interested in inhabiting the physical spaces imagined by these deep neural networks. What if I could walk through a Steele Hall built of Legos? Or through my room if it had been designed by Salvador Dalí? Or through my hometown if it had been ravaged by climate change? The main idea of this project is to bring some of these fantasies (at least partially) into reality by leveraging text2img, img2img, and ControlNet, as well as some custom scripting.

Minimum Viable Product (MVP) 1

Use the Stable Diffusion Web API to automatically process a real video taken inside a building, perhaps downsampled to 15 FPS, and reimagine it, e.g. as a building designed by M.C. Escher.
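
As a rough sketch of the downsampling step, the snippet below picks which source frames to send to Stable Diffusion so that the output plays at roughly 15 FPS. The function name `frames_to_keep` and the 30 FPS source rate in the usage line are my own assumptions for illustration; the actual recording rate and the call to the Stable Diffusion API are not shown here.

```python
from typing import List


def frames_to_keep(n_frames: int, src_fps: float, target_fps: float = 15.0) -> List[int]:
    """Return indices of source frames to keep when downsampling a video.

    Hypothetical helper: spreads the kept frames evenly over the clip so that
    playing them back at target_fps preserves the original duration.
    """
    if n_frames <= 0:
        return []
    if target_fps >= src_fps:
        # Nothing to drop: the source is already at or below the target rate.
        return list(range(n_frames))
    # Number of output frames needed to cover the same duration.
    n_out = int(n_frames * target_fps / src_fps)
    # Map each output frame back to the source frame at the same timestamp.
    return [int(k * src_fps / target_fps) for k in range(n_out)]


# Example: a 2-second clip recorded at an assumed 30 FPS keeps every other frame.
kept = frames_to_keep(60, src_fps=30.0)
```

Only the kept frames would need to go through img2img/ControlNet, which halves the processing time for a 30 FPS recording.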

MVP 2

Use the same process as MVP 1, but implement a small interactive element. For example, record videos of looking around in different parts of a building, as well as the transitions to walk between them. Then, for the “looking around” segments, assuming I pan the camera through a full 360° at a steady rate, I can calculate the angle of each frame in the video and map some kind of input to looking right/left, as well as to walking to the next key point.
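
The angle calculation could look something like the sketch below, under the assumption stated above that the pan covers exactly one full turn at a constant rate, so frame `i` of `n` sits at `360 * i / n` degrees. The names `frame_angle` and `frame_for_angle` are hypothetical, and a real recording would likely need some correction for uneven panning.

```python
def frame_angle(i: int, n_frames: int) -> float:
    """Viewing angle in degrees of frame i, assuming a steady 360-degree pan."""
    return 360.0 * i / n_frames


def frame_for_angle(angle_deg: float, n_frames: int) -> int:
    """Index of the frame closest to a requested heading (wraps around 360)."""
    fraction = (angle_deg % 360.0) / 360.0
    return round(fraction * n_frames) % n_frames


# Example: "look right" by 15 degrees from the current heading.
n = 120                      # assumed number of frames in one looking-around segment
heading = 90.0               # current heading in degrees
heading = (heading + 15.0) % 360.0
frame_to_show = frame_for_angle(heading, n)
```

With this mapping, a left/right key press only has to adjust the heading; the frame lookup takes care of wrapping past 0° or 360°.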

MVP 3

If I can get access to a USB camera, I can set it up so that it continually streams photos from the camera to Stable Diffusion and displays the result, which is probably the closest we can get to a real-time Dream Tour. If I can’t get a hold of a USB camera, I can FaceTime myself from my laptop and then use pyautogui to grab the part of my screen that’s showing my phone.
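
A minimal sketch of the screen-grab fallback is below. It assumes `pyautogui.screenshot(region=(left, top, width, height))` is used to capture the FaceTime window; the region coordinates, the `stream` loop, and the `process`/`show` callables are placeholders I made up for illustration, and the Stable Diffusion call itself is left as a stub.

```python
import time
from typing import Any, Callable, Tuple

Region = Tuple[int, int, int, int]  # (left, top, width, height) in screen pixels


def grab_region(region: Region) -> Any:
    """Capture one part of the screen (e.g. the FaceTime window) as an image."""
    import pyautogui  # imported lazily so the loop below can be tried without a display
    return pyautogui.screenshot(region=region)


def stream(grab: Callable[[], Any],
           process: Callable[[Any], Any],
           show: Callable[[Any], None],
           n_frames: int,
           delay_s: float = 0.0) -> None:
    """Repeatedly grab a frame, run it through the model, and display the result."""
    for _ in range(n_frames):
        frame = grab()
        show(process(frame))       # process is where Stable Diffusion would be called
        if delay_s > 0:
            time.sleep(delay_s)


# Dry run with stand-ins: no screen capture and no model involved.
shown = []
stream(grab=lambda: "frame", process=lambda f: f.upper(), show=shown.append, n_frames=3)
```

For the real thing, `grab` would be something like `lambda: grab_region((0, 0, 640, 480))` with the region set to wherever the phone's video appears on screen. The frame rate will be limited by how long each Stable Diffusion call takes.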